Navigating the Black Box Continuum: AI Agents vs. Automation

Artificial intelligence is no longer a science‑fiction novelty. A mid‑2025 PwC survey found that 79 percent of companies already use some form of AI agent and 88 percent intend to increase their AI budgets over the next year (Source: svitla.com). These numbers show how quickly autonomous technologies are moving from experimentation to mainstream deployment. Yet the road to value is not smooth. The RAND Corporation recently noted that more than 80 percent of AI projects fail — about twice the failure rate of non‑AI IT projects (Source: rand.org). Gartner goes even further, predicting that over 40 percent of agentic AI projects will be canceled by 2027, and warns that only a minority of vendors offer real agency while many are simply “agent‑washing” existing chatbots (Source: Gartner). These statistics illustrate a paradox: organizations are investing heavily in AI, yet most initiatives stumble.

The fundamental reason lies not in the technology itself but in how we define and apply it. Forrester analysts argue that businesses often bolt “smart” tools onto existing infrastructure without changing underlying processes, leading to poor orchestration and wasted investment (Source: cdotrends.com). RAND’s research adds that miscommunication about project goals and a focus on shiny technology instead of real problems are the leading causes of failure (Source: rand.org). In other words, confusion over what constitutes automation, AI assistance, and fully autonomous agents can doom a project before it starts. This article proposes a simple mental model – the black box continuum – to clarify these categories, explain the data and workflow requirements behind each, and show why making the wrong assumptions can have expensive consequences.

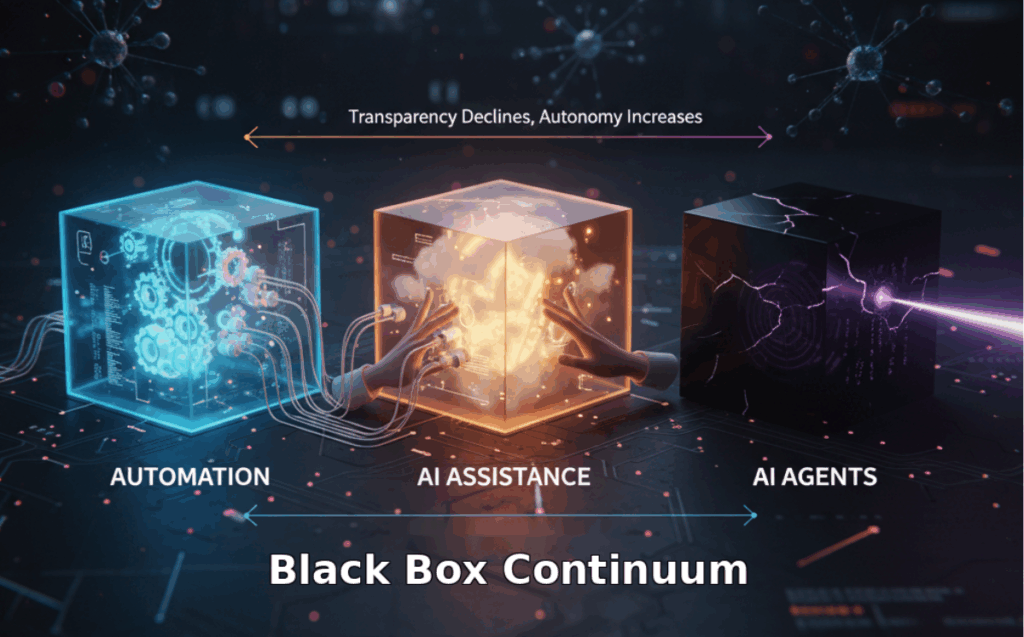

The Black Box Continuum

As AI systems become more autonomous, their inner workings become harder to trace. This is often described as a progression from a “glass box”—where logic and decision paths are transparent—to a “black box”, where the system acts on its own and humans cannot easily see how it reached its conclusions. The continuum below maps three broad categories—automation, AI assistance, and AI agents—against increasing autonomy and decreasing transparency. Use this mental model to evaluate your own initiatives: as you move toward the right, you gain adaptability but lose interpretability and must invest more in governance, data quality and infrastructure.

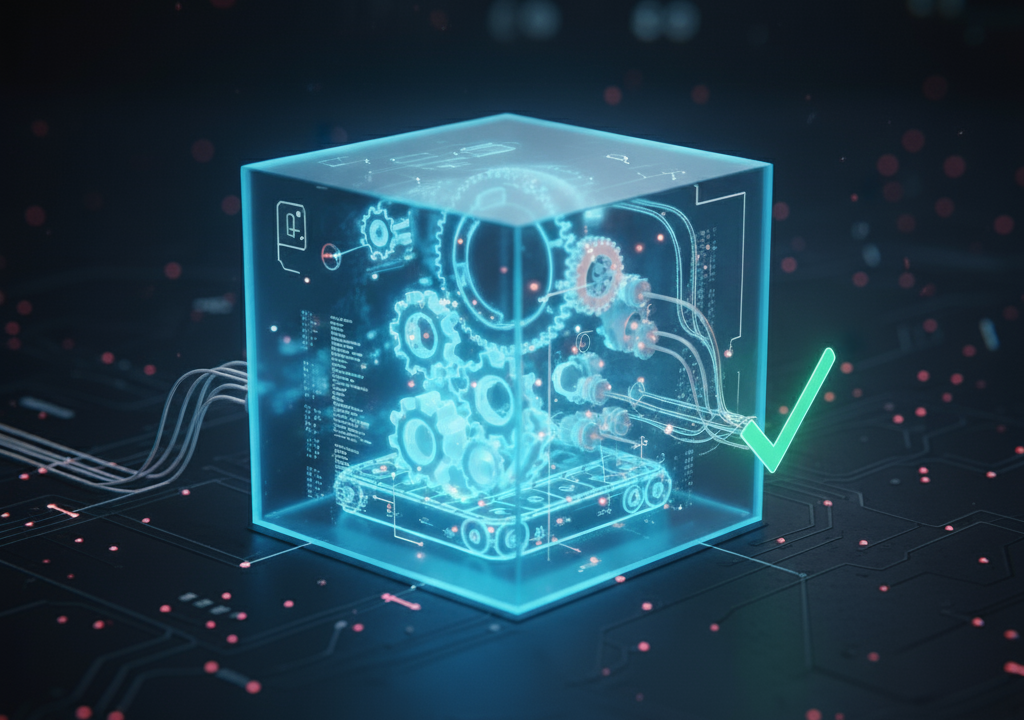

Traditional Automation — Transparent Logic

What it is. Traditional automation refers to rule‑based systems that execute predefined, linear workflows. As Svitla Systems describes, these processes thrive in environments with structured inputs and stable steps, such as invoice processing, payroll or routine IT tickets (Source: svitla.com). Developers explicitly program each decision, so the system’s logic is fully transparent.

(Image credits: Google Gemini)

Data and workflow requirements. Automation relies on clean, structured data – think forms, tables or sensor readings. Workflows must be well understood and rarely change. Because logic is coded in advance, you need precise business rules and clear exception handling.

Transparency/Black‑Box Level. Automation is essentially a glass box: every decision follows an “if‑this‑then‑that” path. Audit trails are straightforward, and failures are usually due to incorrect rules rather than opaque reasoning. This makes automation ideal for compliance‑sensitive processes.

Example and potential pitfalls. Consider an accounts payable robot that reads invoices, matches them to purchase orders, and triggers payment if all fields align. If someone mislabels the invoice data or changes the file format, the bot will fail. However, the failure mode is clear and fixable – the logic is visible. Problems arise when organizations misapply rule‑based bots to unpredictable tasks (e.g., handling customer complaints written in free text). In such cases, bots break easily and may deliver little return, echoing industry observations that a significant portion of RPA initiatives fail to scale (Source: svitla.com).
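The accounts payable example can be sketched as a handful of explicit rules. This is a minimal illustration, not a production matcher: the `Invoice` and `PurchaseOrder` types, their field names and the three decision labels are assumptions made for this sketch.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class PurchaseOrder:
    po_number: str
    vendor: str
    amount: Decimal


@dataclass(frozen=True)
class Invoice:
    invoice_number: str
    po_number: str
    vendor: str
    amount: Decimal


def match_invoice(invoice: Invoice, orders: dict[str, PurchaseOrder]) -> tuple[str, str]:
    """Return (decision, reason). Every branch is an explicit, auditable rule."""
    po = orders.get(invoice.po_number)
    if po is None:
        return "reject", f"no purchase order {invoice.po_number}"
    # Compare vendor names case-insensitively and ignoring surrounding spaces.
    if invoice.vendor.strip().lower() != po.vendor.strip().lower():
        return "review", "vendor name does not match purchase order"
    if invoice.amount != po.amount:
        return "review", "amount does not match purchase order"
    return "pay", "all fields align"


# Hypothetical sample data for illustration only.
orders = {"PO-1001": PurchaseOrder("PO-1001", "Acme GmbH", Decimal("250.00"))}
ok = Invoice("INV-1", "PO-1001", "acme gmbh", Decimal("250.00"))
odd = Invoice("INV-2", "PO-1001", "Acme GmbH", Decimal("275.00"))

print(match_invoice(ok, orders))   # ('pay', 'all fields align')
print(match_invoice(odd, orders))  # ('review', 'amount does not match purchase order')
```

Because each outcome is returned together with the rule that produced it, the audit trail is simply the code path that was taken. The same property explains the brittleness: an input the rules did not anticipate ends in a rejection or a review, never in a creative guess.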

💡 Practical Pro-Tips to get started 💡

- Prioritization – Picture Peter in accounting clicking through the same sequence of screens in the CRM and finance system every morning, entering the same invoice data or generating the same report. These highly structured, repeatable steps are ripe for automation. Start by listing out the tasks you can watch someone do from end to end without stopping to ask questions. Those are the low-hanging fruit.

- Process mapping – Imagine finding out that Sally in Sales downloads a CSV, adds a few notes on her laptop, prints it, scribbles comments, and then scans it back in. These “invisible” steps – local files, handwritten notes, faxes, printed emails – are like potholes on the road to automation. Before building bots, sit down with your team, draw the whole process on a whiteboard, and uncover those offline workflows that would otherwise trip up your robots. Repeat these workshops as needed: run your bot between sessions, then check whether its output matches the expected result.

- Preparation – Good automation begins with good housekeeping. Picture boxes of paper invoices stacked in the hallway closet and five different spreadsheets with slightly different column names. Take time to digitize, organize, and standardize this data. A bot can do wonders once it has clean, structured inputs, but it can’t guess what “Inv. No.” means if sometimes it’s “Invoice #” or “Bill ID.” Clean it up first; your future self will thank you.

- Start with a pilot – Instead of trying to automate your whole back office at once, imagine a test kitchen. Pick one simple dish—say, automating the generation of monthly expense reports—and let the bot handle it end-to-end. Watch where it stumbles (maybe it can’t read a PDF or needs a manual approval), fix the recipe, and then scale the bot to handle more dishes. This way you learn in a controlled environment before rolling out a full menu.

- Don’t sweat every exception – In most offices there’s always that one weird invoice or edge case that happens once a year. Rather than paralyze your automation project trying to cover every possibility, picture a sticky note on your monitor that says “Ask Fred” for those oddball situations. Let the bot handle the 95 percent it can do consistently, and plan for humans to step in when something truly unusual appears. You can refine exceptions later.

- Human in the loop – As illustrated in the point above – even simple bots shouldn’t run entirely on autopilot. Picture an automated invoice processor that matches purchase orders and triggers payments. Most of the time, it whizzes through dozens of invoices without trouble. But whenever it hits an unusual amount or mismatched vendor name, it pauses and sends a quick notification to a finance manager. The manager glances at a dashboard, decides whether it’s a genuine discrepancy, and either approves the payment or flags it for review. That two-minute check prevents costly mistakes without bogging down routine tasks.
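The “Inv. No.” versus “Invoice #” versus “Bill ID” problem from the preparation tip can be handled with a small alias table. The sketch below is one possible approach, not a prescribed one: the canonical names and the alias sets are hypothetical and would need to be filled in from the headers found in your own spreadsheets.

```python
import re

# Hypothetical alias table: canonical column name -> cleaned header variants.
HEADER_ALIASES = {
    "invoice_number": {"inv no", "invoice", "invoice no", "bill id"},
    "amount": {"amount", "total", "total eur"},
}


def _clean(header: str) -> str:
    """Lowercase the header and collapse punctuation and spaces into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", header.lower()).strip()


def canonical_header(header: str) -> str:
    """Map a raw column header to its canonical name, or fail loudly."""
    cleaned = _clean(header)
    for canonical, aliases in HEADER_ALIASES.items():
        if cleaned in aliases:
            return canonical
    # Unknown headers are an error on purpose: the bot should not guess.
    raise KeyError(f"unmapped column header: {header!r}")


def normalize_row(row: dict[str, str]) -> dict[str, str]:
    """Rename every column of one spreadsheet row to its canonical name."""
    return {canonical_header(key): value for key, value in row.items()}


print(normalize_row({"Bill ID": "A-17", "Total (EUR)": "99.50"}))
# {'invoice_number': 'A-17', 'amount': '99.50'}
```

Raising an error on an unmapped header is a deliberate choice in this sketch. It sends the odd spreadsheet to a person instead of letting the bot process a column it does not understand.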

AI Assistance — Semi‑Transparent Co‑Pilots

What it is. AI assistance encompasses systems that augment human workers rather than replacing them. These tools – ranging from chat‑based copilots to intelligent search and classification engines – use machine learning to process unstructured data and generate suggestions. They still require humans to set goals, provide feedback and make final decisions.

(Image Credits: Google Gemini)

Data and workflow requirements. AI assistance needs more varied data than automation. Models are trained on large datasets (e.g., language corpora or domain‑specific documents) and may use techniques like retrieval‑augmented generation to incorporate external knowledge. Workflows must incorporate human oversight: outputs are drafts, not final actions. Because models are probabilistic, teams must plan for review and correction cycles.

Transparency/Black‑Box Level. These systems are partly opaque. While the overall input–output flow is known, the internal reasoning of large language models is not fully interpretable. As a result, they can produce hallucinations or biased outputs if not constrained. Logging and prompt design help, but some opacity remains.

Example and potential pitfalls. A support co‑pilot might read incoming emails, categorize requests and suggest responses. Humans verify and send the messages. If a team mistakenly assumes the assistant can fully automate responses without oversight, they risk sending incorrect or tone‑deaf replies. Misalignment between the model’s training data and the company’s brand voice can also lead to reputational harm. AI assistance is powerful but demands careful integration with human judgment.

💡 Practical Pro-Tips to get started 💡

- Tune it to your language – Imagine giving a generic language model your company’s internal jargon and letting it learn how you talk. Feed it examples of past emails, support tickets, or chat interactions. The goal is to make your AI assistant sound like a trusted colleague instead of a random chatbot. That way, when it drafts a reply or summarizes a meeting, it uses your tone and terms naturally.

- Output supervision – Think of the assistant as a junior team member who suggests answers but still needs a supervisor. Set up a workflow where the AI’s draft pops up for review before anything goes live. Someone glances at the draft, edits if necessary, and approves it. This avoids embarrassing blunders and builds trust in the system over time, among employees as well as customers.

- Track what it improves – Picture a simple dashboard where you can see that the assistant cuts average response time from ten minutes to two, or that it drafts five times more support emails per hour than before. Keep track of metrics like time saved or accuracy improvements. With hard numbers, it’s easier to justify further investment and improvements.

- Human in the loop – Treat your AI assistant like a junior team member. It can handle the heavy lifting, but you still need to review its work. Imagine your assistant takes the notes from a product brainstorming session and automatically compiles a summary with proposed next steps. Before sharing the document with stakeholders, the product owner reads through it, clarifies who owns each action item, corrects misunderstandings (for instance, an idea that was rejected during the meeting), and removes sensitive details that aren’t ready for broad distribution.
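The review step described in these tips can be enforced in code, so that an unreviewed draft cannot go out by accident. The following is a minimal sketch under stated assumptions: `Draft`, `ReviewQueue` and their methods are hypothetical names, the model call that produces the draft text is left out, and `send` only returns the text where a real system would call a mail or ticketing API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    ticket_id: str
    text: str  # produced by the assistant; the model call is not shown
    status: Status = Status.PENDING
    reviewer: Optional[str] = None


class ReviewQueue:
    """Holds assistant drafts until a human approves or rejects them."""

    def __init__(self) -> None:
        self._drafts: dict[str, Draft] = {}

    def submit(self, draft: Draft) -> None:
        self._drafts[draft.ticket_id] = draft

    def approve(self, ticket_id: str, reviewer: str,
                edited_text: Optional[str] = None) -> Draft:
        draft = self._drafts[ticket_id]
        if edited_text is not None:
            draft.text = edited_text  # the reviewer's edit replaces the draft
        draft.status = Status.APPROVED
        draft.reviewer = reviewer
        return draft

    def reject(self, ticket_id: str, reviewer: str) -> Draft:
        draft = self._drafts[ticket_id]
        draft.status = Status.REJECTED
        draft.reviewer = reviewer
        return draft

    def send(self, ticket_id: str) -> str:
        draft = self._drafts[ticket_id]
        if draft.status is not Status.APPROVED:
            raise PermissionError(f"draft {ticket_id} has not been approved")
        return draft.text


queue = ReviewQueue()
queue.submit(Draft("T-1", "Hi, your refund is on its way."))
queue.approve("T-1", reviewer="dana", edited_text="Hello, your refund has been issued.")
print(queue.send("T-1"))  # Hello, your refund has been issued.
```

Recording the reviewer on each draft also gives a simple starting point for the metrics mentioned above, such as how often drafts are edited before approval.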

AI Agents — Opaque Autonomy

What it is. AI agents move beyond assistance into independent action. Forrester defines agentic AI as “advanced AI systems, powered by foundation models, that demonstrate a high degree of autonomy, intentionality, and adaptive behavior” (Source: Forrester). These systems plan tasks, select tools, adapt to feedback and pursue goals without constant human prompting. They coordinate multiple steps, often across different applications, and learn from experience.

(Image Credits: Google Gemini)

Data and workflow requirements. Agents consume diverse data sources: structured, semi‑structured and unstructured. They rely on foundation models and access to external tools via APIs. Building an agent requires more than training a model; organizations must supply infrastructure for memory, planning and reflection as described by Forrester’s design patterns (Source: reprint.forrester.com). Agents also depend on orchestration frameworks that can invoke sub‑agents, monitor progress and handle exceptions (Source: cdotrends.com). It is also vital to recognize that your AI agents will interact with other parties’ agents, so constraints need to be set in both directions: on outgoing actions and on incoming requests.

Transparency/Black‑Box Level. Agents represent the black‑box extreme. They may take actions or make decisions that are difficult to explain, even when logs are available. Gartner warns that this opacity means many vendors overpromise: over 40 percent of agentic AI projects could be canceled by 2027 (Source: xmpro.com). The prediction underscores a critical risk of deploying agents without understanding their behavior or building the necessary governance. When dealing with AI agents, it is vital to set up review processes that identify attributes such as an agent’s (hidden) intentions and its origin, i.e. the legally accountable entity that owns it. Dataiku stresses that accountability is a major risk when establishing agentic AI, and that mitigating this risk requires cross‑functional controls such as explainability techniques, alerts and kill switches, and continuous monitoring (Source: blog.dataiku.com). In addition, XMPro argues that “risk control goes beyond monitoring; agentic systems must embed ethical reasoning into their decision‑making processes” (Source: xmpro.com). Meanwhile, agent access is getting increasingly easy through the Model Context Protocol (MCP), so adoption is currently outpacing the development of security practice (Source: businessinsights.bitdefender.com). Organizations now face an unprecedented situation: they must build governance frameworks quickly, even though best practices are still emerging.

Example and potential pitfalls. An AI agent for supply‑chain management might monitor inventory, identify shortages, place orders with suppliers and adjust logistics in real time. Without clear guardrails, such an agent could reorder stock based on faulty data, overspending and disrupting operations. Forrester analysts note that most enterprises lack the orchestration capabilities needed for true agency, making architectural re‑design a prerequisite for success (Source: cdotrends.com). When companies deploy “agents” as if they were simple bots, the result will likely be very expensive systems that cannot deliver on promises. There is also the question of counterparties: even if your supply‑chain agent is only allowed to trade with approved suppliers, how can it be sure that it is not dealing with a malicious impostor agent? And how can it still take advantage of deals offered by non‑approved vendors?

💡 Practical Pro-Tips to get started 💡

- Define a sandbox – Before giving an AI agent access to your live order system, imagine building a miniature replica. This sandbox has dummy data but mirrors real processes – inventory, suppliers, shipping rules. You let the agent learn and make decisions here first, so any missteps don’t impact customers. It’s like a flight simulator for pilots: mistakes are learning opportunities, not disasters.

- Set guardrails – Think of guardrails like the training wheels on a child’s bike. Even if the agent can decide which supplier to use or how much to reorder, you might insist that all orders over €1,000 still require a human click to confirm. Or you might forbid the agent from placing rush orders unless inventory drops below a critical level. Clear “do not cross” lines keep autonomy from turning into chaos.

- Monitor and log everything – Imagine a real-time feed showing every action the agent takes, along with a simple explanation: “Ordered 500 widgets because inventory fell below threshold.” Keep logs and dashboards that let you or an auditor trace back what happened and why. If the agent makes a mistake or you need to refine the rules, these logs will be your map back through its decision-making.

- Human in the loop – Even with agents acting autonomously, people need to set goals and intervene at key checkpoints. Imagine a logistics agent that monitors inventory levels and automatically places restock orders. Most of the time it works behind the scenes, keeping warehouses stocked. But when it senses an unusual spike in demand and suggests placing a significantly larger order, it escalates the decision to a supply-chain manager. The manager reviews market forecasts and approves or rejects the recommendation. This oversight keeps the agent from making risky moves while still letting it handle day-to-day planning.
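The guardrail and logging tips above can be combined into a single check that every proposed order must pass before it reaches the live order system. This is a sketch under stated assumptions: the €1,000 approval threshold comes from the example in the text, while `CRITICAL_STOCK_LEVEL`, the `ProposedOrder` fields and the decision labels are hypothetical. The agent itself is not shown; it is assumed to hand over a proposal together with its own stated reason.

```python
from dataclasses import dataclass
from decimal import Decimal

APPROVAL_THRESHOLD_EUR = Decimal("1000")  # from the example in the text
CRITICAL_STOCK_LEVEL = 50                 # hypothetical value for illustration


@dataclass(frozen=True)
class ProposedOrder:
    supplier: str
    item: str
    quantity: int
    total_eur: Decimal
    rush: bool
    current_stock: int
    reason: str  # the agent's own explanation for the order


def check_order(order: ProposedOrder, approved_suppliers: set[str],
                audit_log: list[dict]) -> str:
    """Return 'allow', 'needs_human' or 'block', and log the decision."""
    if order.supplier not in approved_suppliers:
        decision, rule = "block", "supplier is not on the approved list"
    elif order.rush and order.current_stock >= CRITICAL_STOCK_LEVEL:
        decision, rule = "block", "rush order while stock is above critical level"
    elif order.total_eur > APPROVAL_THRESHOLD_EUR:
        decision, rule = "needs_human", "order total exceeds approval threshold"
    else:
        decision, rule = "allow", "within all guardrails"
    # Log every decision, including allowed ones, so it can be traced later.
    audit_log.append({
        "item": order.item,
        "quantity": order.quantity,
        "decision": decision,
        "rule": rule,
        "agent_reason": order.reason,
    })
    return decision


log: list[dict] = []
suppliers = {"Acme GmbH"}
order = ProposedOrder("Acme GmbH", "widget", 500, Decimal("2500.00"),
                      rush=False, current_stock=20,
                      reason="inventory fell below threshold")
print(check_order(order, suppliers, log))  # needs_human
print(log[0]["rule"])                      # order total exceeds approval threshold
```

Keeping the guardrails outside the agent, in plain deterministic code, means this part of the system stays a glass box even when the agent's own reasoning is not.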

Comparing the Three Approaches

The table below summarizes the key differences between traditional automation, AI assistance and AI agents. It highlights the data requirements, transparency level, examples and common failure modes for each. These concise distinctions can help product teams quickly assess which category best fits their needs.

| Aspect | Automation | AI Assistance | AI Agents |
|---|---|---|---|
| Data & Workflow | Structured inputs (forms, tables); deterministic rules; fixed workflows | Mix of structured and unstructured data; ML models; human‑in‑the‑loop workflows | Diverse data sources; foundation models; orchestration across tools; adaptive planning |
| Transparency | Transparent (glass box) | Semi‑transparent; some black‑box reasoning | Opaque (black box); autonomous decision‑making |
| Examples | Invoice matching; payroll processing | Drafting support emails; summarizing meetings | Supply‑chain optimization; automated tax preparation; vertical copilot for banking |
| Misuse Risk | Applying to tasks with unpredictable inputs leads to brittle bots | Assuming outputs require no review can cause incorrect or biased results | Deploying without proper governance or architecture leads to failures and cancellations |

Why Misdefinitions Lead to Failure

Clear terminology is not a mere academic exercise – it directly influences project outcomes. RAND’s study on AI project failures identifies misunderstanding or miscommunicating the problem to be solved as the most common root cause (Source: rand.org). When leaders frame an initiative as needing an “AI agent” but the underlying task simply requires automation or AI assistance, teams may build unnecessarily complex systems. This not only wastes resources but also increases risk. Other root causes, according to RAND, include lacking adequate data, focusing on trendy technology instead of real problems, insufficient infrastructure, and applying AI to problems too hard for current methods (Source: rand.org). All of these issues are exacerbated when expectations are misaligned with the chosen AI approach.

Mislabeling can also undermine governance. Automation can often rely on existing controls and audits. AI assistance demands monitoring of model outputs and human oversight. Agents require new layers of observability, role‑based access, and safety constraints to prevent harmful actions. Gartner cautions that many vendors tout “agents” without building these controls, leading to cancellations and waste (Source: xmpro.com). By insisting on precise definitions and aligning technology to real needs, leaders can avoid these pitfalls.

Trends, Warnings and Architectural Implications

Agentic AI offers tremendous potential, but only if organizations are prepared. Forrester researchers note that the shift to agents is not about adding yet another tool; it demands re‑architecting technology stacks and developing orchestration capabilities that most enterprises currently lack. Their surveys suggest that a growing number of global firms may need to completely redesign their systems to support agentic capabilities – an early estimate of 10 percent has already risen to 20 percent (Source: cdotrends.com). These findings highlight that adopting agents isn’t a plug‑and‑play exercise but a fundamental transformation.

Gartner’s latest forecasts illustrate the stakes. In addition to predicting the cancellation of a large share of agentic AI projects, Gartner expects that 15 percent of day‑to‑day work decisions will be made autonomously by 2028 and one‑third of enterprise software will include agentic capabilities (Source: xmpro.com). These projections show that agentic AI is poised to become ubiquitous. Yet Gartner’s emphasis on cancellations underscores that many organizations will fail if they don’t invest in governance, data quality and architectural coherence.

The takeaway is not to avoid agents altogether but to choose the right approach for the right problem. For routine, stable tasks, rule‑based automation remains a powerful tool. For dynamic workflows where humans need support, AI assistance can dramatically boost productivity. Only when tasks require independent planning, decision‑making and adaptation should organizations consider AI agents – and then only with the necessary investments in architecture, oversight and governance.
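As a rough illustration, the selection logic in the paragraph above can be written down as a small triage function. It is a deliberately coarse sketch, not a decision framework: the four yes/no fields of `TaskProfile` are assumptions made here, and falling back to AI assistance when governance is not yet in place is one reading of “only with the necessary investments”.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskProfile:
    structured_inputs: bool           # forms, tables, sensor readings
    stable_steps: bool                # the workflow rarely changes
    needs_independent_planning: bool  # multi-step planning and adaptation
    governance_ready: bool            # oversight, logging and guardrails exist


def recommend(task: TaskProfile) -> str:
    """Suggest a position on the continuum for one task."""
    if task.structured_inputs and task.stable_steps:
        return "automation"
    if task.needs_independent_planning and task.governance_ready:
        return "ai_agent"
    # Everything else keeps a human in control of the final decision.
    return "ai_assistance"


print(recommend(TaskProfile(True, True, False, False)))   # automation
print(recommend(TaskProfile(False, False, True, False)))  # ai_assistance
print(recommend(TaskProfile(False, False, True, True)))   # ai_agent
```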

Conclusion: Clarity as a Competitive Advantage

The surge in AI investment shows no sign of slowing. PwC’s research suggests that executives are betting heavily on autonomous systems to drive efficiency and innovation (Source: svitla.com). But investment alone is not enough. Most AI projects fail because organizations misunderstand what they’re building (Source: rand.org). By viewing AI systems along the black‑box continuum, teams gain a simple but powerful tool for aligning expectations, workflows and governance:

- Automation is transparent and deterministic;

- AI assistance introduces probabilistic reasoning but keeps humans in control;

- AI agents act autonomously and demand robust architecture and oversight.

Clear definitions won’t eliminate every challenge, but they will prevent many. They help ensure that data and infrastructure match the needs of the task, that stakeholders share a common understanding of goals, and that governance keeps pace with autonomy. As Forrester puts it, agentic AI “enables AI to act rather than just think — and paves the way for more advanced and versatile general‑purpose AI‑based apps” (Source: reprint.forrester.com), but acting intelligently requires careful planning by humans. By navigating the black box continuum with intention, organizations can move from hype to sustainable impact – and avoid becoming yet another statistic in the long list of failed AI projects.